Before we talk about the phases, let’s talk about the compiler first. Compiler is a program that runs the compilation processes. Where compilation is the process where the compiler reads a program written in one language (source language) and translates it into an equivalent program in another language (target language).

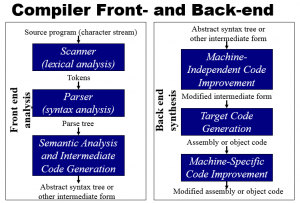

Compilation of a program proceeds through a fixed series of phases, where each phase use an (intermediate) form of the program produced by an earlier phase, and subsequent phases operate on lower-level code representations. These processes are divided into two main parts, which are front-end compiler (analysis part), and back-end compiler (synthesis part).

pic 01. Front- and Back-end Compiler

Front-end Compiler (Analysis part)

The analysis part breaks up the source program into constituent pieces and imposes a grammatical structure on them. It then uses this structure to create an intermediate representation of the source program. If the analysis part detects that the source program is either syntactically ill formed or semantically unsound, then it must provide informative messages, so the user can take corrective action. The analysis part also collects information about the source program and stores it in a data structure called a symbol table, which is passed along with the intermediate representation to the synthesis part. This part includes all the analysis phases (lexical, syntax, semantic analysis) and end at intermediate code phase.

Back-end Compiler (Synthesis part)

Meanwhile, the synthesis part constructs the desired target program from the intermediate representation and the information in the symbol table. This part includes the code optimization phase and code generation phase. Some compilers have a machine-independent optimization phase between the front end and the back end. The purpose of this optimization phase is to perform transformations on the intermediate representation, so that the back end can produce a better target program than it would have otherwise produced from an unoptimized intermediate representation.

In short, the front end analyzes the source program and produces intermediate code while the back end synthesizes the target program from the intermediate code.

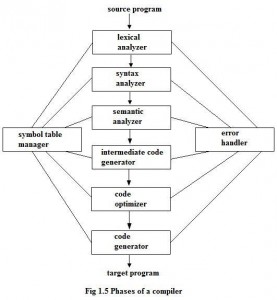

If we see the compilation process in more detail, we see that it operates as a sequence of phases, each of which transforms one representation of the source program to another. As we see from the picture below:

pic 02. Phases of Compilation

For further understanding, here below is the explanation for each phases.

Lexical analysis (Scanning)

The lexical analyzer reads the stream of characters making up the source program and groups the characters into meaningful sequences called lexemes. For each lexeme, the lexical analyzer produces as output a token in the form:

(token-name, attribute-value)

Then, it will passes on to the syntax analysis. In the token, the first component token-name is an abstract symbol that is used during syntax analysis, and the second component attribute-value points to an entry in the symbol table for this token. Information from the symbol-table entry is needed for semantic analysis and code generation.

For example, let’s say a source program contains the assignment statement:

position = initial + rate * 60

The characters in this assignment could be grouped into the following lexemes and mapped into the following tokens passed on to the syntax analyzer:

- position is a lexeme that would be mapped into a token (id, 1), where id is an abstract symbol standing for identifier and 1 points to the symbol table entry for position. The symbol-table entry for an identifier holds information about the identifier, such as its name and type.

- The assignment symbol = is a lexeme that is mapped into the token (=). Since this token needs no attribute-value, we have omitted the second component. We could have used any abstract symbol such as assign for the token-name, but for notational convenience we have chosen to use the lexeme itself as the name of the abstract symbol.

- initial is a lexeme that is mapped into the token (id, 2), where 2 points to the symbol-table entry for initial.

- + is a lexeme that is mapped into the token (+).

- rate is a lexeme that is mapped into the token (id, 3), where 3 points to the symbol-table entry for rate.

- is a lexeme that is mapped into the token (*).

- 60 is a lexeme that is mapped into the token (60).

Blanks separating the lexemes would be discarded by the lexical analyzer. Here below is the representation of the assignment statement after lexical analysis as the sequence of tokens:

(id, 1) (=) (id, 2) (+) (id, 3) (*) (60)

In this representation, the token names =, +, and * are abstract symbols for the assignment, addition, and multiplication operators, respectively.

Syntax analysis (Parsing)

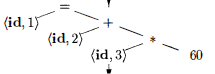

The parser uses the first components of the tokens produced by the lexical analyzer to create a tree-like intermediate representation that depicts the grammatical structure of the token stream. A typical representation is a syntax tree in which each interior node represents an operation and the children of the node represent the arguments of the operation. A syntax tree for the token stream from lexical analysis example is shown as the output of the syntactic analyzer in picture below.

pic 03. Syntax Analysis

This tree show the order in which the operations in the assignment are to be performed.

Semantic analysis

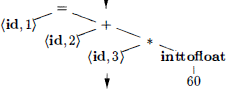

The semantic analyzer uses the syntax tree and the information in the symbol table to check the source program for semantic consistency with the language definition. It also gathers type information and saves it in either the syntax tree or the symbol table, for subsequent use during intermediate-code generation.

An important part of semantic analysis is type checking, where the compiler checks that each operator has matching operands. And the language specification may permit some type conversions called coercions. If the operator is applied to a floating-point number and an integer, the compiler may convert or coerce the integer into a floating-point number.

For example, based on our previous assignment statement:

pic 04. Semantic Analysis

Notice that the output of the semantic analyzer has an extra node for the operator inttofloat, which explicitly converts its integer argument into a floating-point number. It’s because the others beside 60 has been declared as float. So with coercions, the integer will be converted into floating-point number.

Intermediate code generation

In the process of translating a source program into target code, a compiler may construct one or more intermediate representations, which can have a variety of forms. Syntax trees are a form of intermediate representation; they are commonly used during syntax and semantic analysis. After syntax and semantic analysis of the source program, many compilers generate an explicit low-level or machine-like intermediate representation, which we can think of as a program for an abstract machine. This intermediate representation should have two important properties: it should be easy to produce and it should be easy to translate into the target machine.

For example, in our assignment statement, the result from semantic analysis will be turned into an intermediate form called three-address code:

t1 = inttofloat(60)

t2 = id3 * t1

t3 = id2 + t2

id1 = t3

Code optimization

The machine-independent code-optimization phase attempts to improve the intermediate code so that better target code will result. Usually better means faster, but other objectives may be desired, such as shorter code, or target code that consumes less power. For example, a straightforward algorithm generates the intermediate code, using an instruction for each operator in the tree representation that comes from the semantic analyzer. A simple intermediate code generation algorithm followed by code optimization is a reasonable way to generate good target code. The optimizer can deduce that the conversion of 60 from integer to floating point can be done once and for all at compile time, so the inttofloat operation can be eliminated by replacing the integer 60 by the floating-point number 60.0. Moreover, t3 is used only once to transmit its value to id1 so the optimizer can transform intermediate code into the shorter sequence:

t1 = id3 * 60.0

id1 = id2 + t1

There is a great variation in the amount of code optimization different compilers perform. In those that do the most, the so-called “optimizing compilers,” a significant amount of time is spent on this phase. There are simple optimizations that significantly improve the running time of the target program without slowing down compilation too much.

Code generation

The code generator takes an input an intermediate representation of the source program and maps it into the target language. If the target is machine code, registers or memory locations are selected for each of the variables used by the program. Then, the intermediate instructions are translated into sequences of machine instructions that perform the same task. A crucial aspect of code generation is the judicious assignment of registers to hold variables.

For example, using registers R1 and R2, the intermediate code might get translated into the machine code:

LDF R2 , id3

MULF R2 , R2 , #60 . 0

LDF R1 , id2

ADDF R1 , R 1 , R2

STF id1 , R1

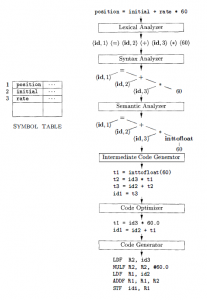

To sum things up, here’s the complete picture of the compilation process based on the assignment statement above:

pic 05. Complete Compilation Process

1501170740 – Pinto Luhur Karsanda 06PWT

References:

- Binus’ slide of Technics Compiler (Session 01-02)

- Aho, A.V., Lam, M.S.,Sethi, R., & Ullman, J.D. (2003). Compilers: Principles, Techniques and Tools. 1st Edition. Prentice Hall. California. ISBN: 0-321-49169-6.

- Phases of Compiler Design

- COP4020 Programming Languages – Compiler phases (Professor Xin Yuan)

- Phases of Compiler